Marianne de Heer Kloots, Martijn Bentum, Tom Lentz, Hosein Mohebbi, Charlotte Pouw, Gaofei Shen, Willem Zuidema, Grzegorz Chrupała

This reading list accompanies our tutorial and upcoming survey. It provides an overview of recent publications reporting interpretability analyses on speech processing models, though it is not a complete list. Is your favourite paper missing or notice an error? Please submit a pull request to suggest additions or changes!

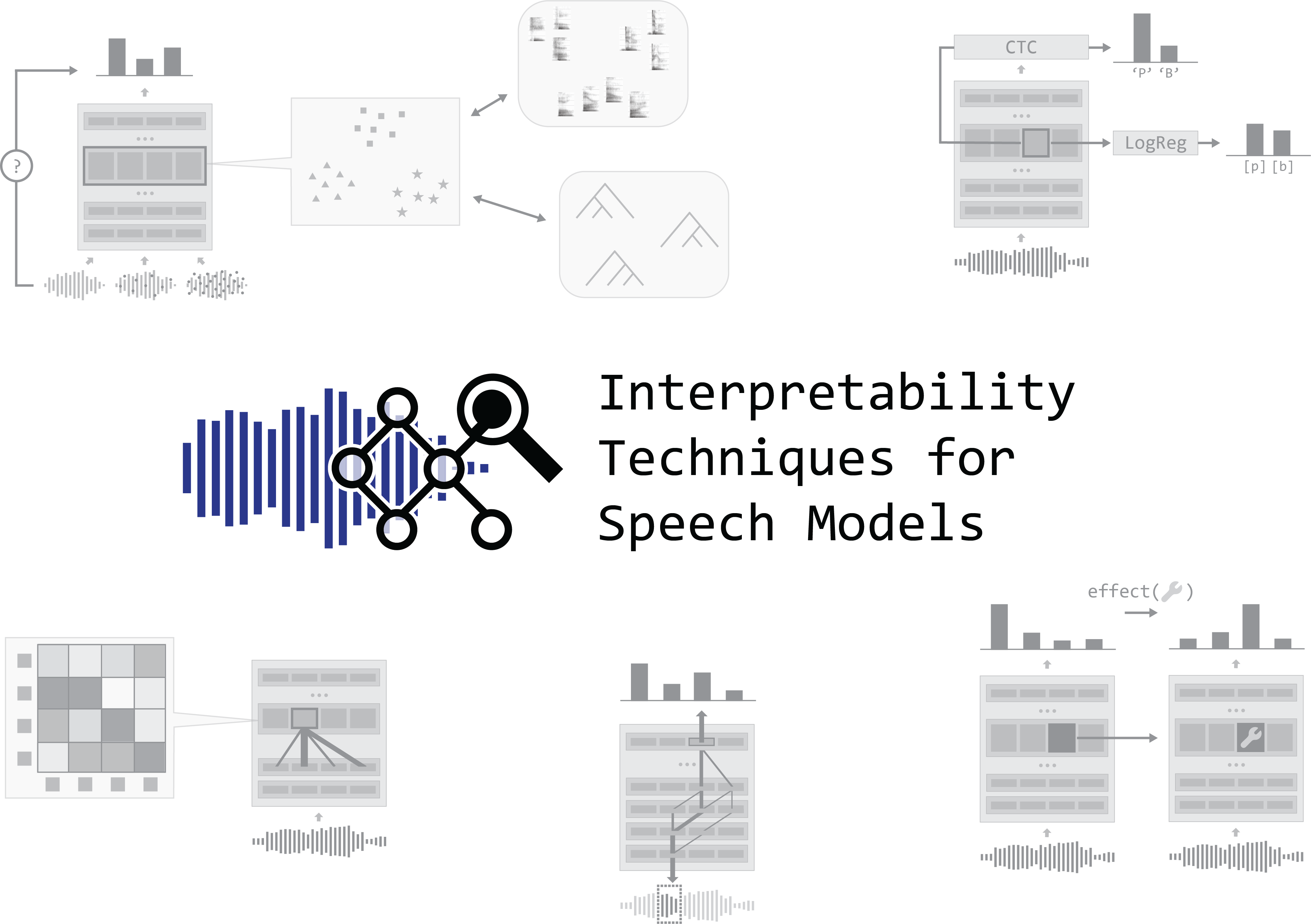

Behavioural analyses focus on interpreting model functioning by identifying systematic patterns in generated output and mapping them to interpretable dimensions in model input (naturalness, grammaticality, noise).

Adolfi, F., Bowers, J. S., & Poeppel, D. (2023). Successes and critical failures of neural networks in capturing human-like speech recognition. Neural Networks, 162, 199–211. https://doi.org/10.1016/j.neunet.2023.02.032

De Seyssel, M., Lavechin, M., Titeux, H., Thomas, A., Virlet, G., Revilla, A. S., Wisniewski, G., Ludusan, B., Dupoux, E. (2023). ProsAudit, a prosodic benchmark for self-supervised speech models. https://doi.org/10.21437/Interspeech.2023-438

Gessinger, I., Amirzadeh Shams, E., & Carson-Berndsen, J. (2025). Under the Hood: Phonemic Restoration in Transformer-Based Automatic Speech Recognition. Rochester, NY: Social Science Research Network. https://doi.org/10.2139/ssrn.5212722

Kim, S.-E., Chernyak, B. R., Seleznova, O., Keshet, J., Goldrick, M., & Bradlow, A. R. (2024). Automatic recognition of second language speech-in-noise. JASA Express Letters, 4(2), 025204. https://doi.org/10.1121/10.0024877

Lavechin, M., Sy, Y., Titeux, H., Blandón, M. A. C., Räsänen, O., Bredin, H., Dupoux, E., Cristia, A. (2023). BabySLM: language-acquisition-friendly benchmark of self-supervised spoken language models. https://doi.org/10.21437/Interspeech.2023-978

Orhan, P., Boubenec, Y., & King, J.-R. (2024). Algebraic structures emerge from the self-supervised learning of natural sounds. https://doi.org/10.1101/2024.03.13.584776

Patman, C., & Chodroff, E. (2024). Speech recognition in adverse conditions by humans and machines. https://doi.org/10.1121/10.0032473

Pouw, C., Alishahi, A., & Zuidema, W. (2025). A Linguistically Motivated Analysis of Intonational Phrasing in Text-to-Speech Systems: Revealing Gaps in Syntactic Sensitivity. In Proceedings of the 29th Conference on Computational Natural Language Learning, pages 126–140, Vienna, Austria. https://aclanthology.org/2025.conll-1.9/

Shim, H.E., Yung, O., Tuttösí, P., Kwan, B., Lim, A., Wang, Y., Yeung, H.H. (2025) Generating Consistent Prosodic Patterns from Open-Source TTS Systems. Proc. Interspeech 2025, 5383-5387. https://doi.org/10.21437/Interspeech.2025-2159

Tånnander, C., House, D., & Edlund, J. (2022). Syllable duration as a proxy to latent prosodic features. In (pp. 220– 224). https://doi.org/10.21437/SpeechProsody.2022-45

Weerts, L., Rosen, S., Clopath, C., & Goodman, D. F. M. (2022). The Psychometrics of Automatic Speech Recognition. bioRxiv. https://doi.org/10.1101/2021.04.19.440438

Zhu, J. (2020). Probing the phonetic and phonological knowledge of tones in Mandarin TTS models. https://doi.org/10.21437/SpeechProsody.2020-190

Representational analyses aim to characterize how model-internal representations are structured, including their organization across layers and alignment with interpretable features.

Abdullah, B. M., Shaik, M. M., Möbius, B., & Klakow, D. (2023). An information-theoretic analysis of self-supervised discrete representations of speech. In Interspeech 2023 (pp. 2883–2887). https://doi.org/10.21437/Interspeech.2023-2131

Algayres, R., Adi, Y., Nguyen, T., Copet, J., Synnaeve, G., Sagot, B., & Dupoux, E. (2023). Generative Spoken Language Model based on continuous word-sized audio tokens. In H. Bouamor, J. Pino, & K. Bali (Eds.), Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (pp. 3008–3028). Singapore: Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.emnlp-main.182

Algayres, R., Ricoul, T., Karadayi, J., Laurençon, H., Zaiem, S., Mohamed, A., Sagot, B. Dupoux, E. (2022). DP-parse: Finding word boundaries from raw speech with an instance lexicon. Transactions of the Association for Computational Linguistics, 10, 1051-1065. https://doi.org/10.1162/tacl_a_00505

Ashihara, T., Moriya, T., Matsuura, K., Tanaka, T., Ijima, Y., Asami, T., Delcroix, M., Honma, Y. (2023). SpeechGLUE: How well can self-supervised speech models capture linguistic knowledge? In Proc. Interspeech 2023 (pp. 2888–2892). https://doi.org/10.21437/Interspeech.2023-1823

Ashihara, T., Delcroix, M., Ochiai, T., Matsuura, K., Horiguchi, S. (2025) Analysis of Semantic and Acoustic Token Variability Across Speech, Music, and Audio Domains. Proc. Interspeech 2025, 226-230, https://doi.org/10.21437/Interspeech.2025-945

Bentum, M., Bosch, L. t., & Lentz, T. (2024). The Processing of Stress in End-to-End Automatic Speech Recognition Models. In (pp. 2350–2354). https://doi.org/10.21437/Interspeech.2024-44

Bentum, M., ten Bosch, L., Lentz, T.O. (2025) Word stress in self-supervised speech models: A cross-linguistic comparison. Proc. Interspeech 2025, 251-255. https://doi.org/10.21437/Interspeech.2025-106

Choi, K., Pasad, A., Nakamura, T., Fukayama, S., Livescu, K., & Watanabe, S. (2024). Self-Supervised Speech Representations are More Phonetic than Semantic. In (pp. 4578–4582). https://doi.org/10.21437/Interspeech.2024-1157

Chrupała, G., Higy, B., & Alishahi, A. (2018) Analyzing analytical methods: The case of phonology in neural models of spoken language. In D. Jurafsky, J. Chai, N. Schluter, & J. Tetreault (Eds.), Proceedings of the 58th annual meeting of the Association for Computational Linguistics (pp. 4146–4156). https://doi.org/10.18653/v1/2020.acl-main.381

Cormac English, P., Kelleher, J. D., & Carson-Berndsen, J. (2022). Domain-Informed Probing of wav2vec 2.0 Embeddings for Phonetic Features. In G. Nicolai & E. Chodroff (Eds.), Proceedings of the 19th SIGMORPHON Workshop on Computational Research in Phonetics, Phonology, and Morphology (pp. 83–91). https://doi.org/10.18653/v1/2022.sigmorphon-1.9

de Heer Kloots, M., & Zuidema, W. (2024). Human-like Linguistic Biases in Neural Speech Models: Phonetic Categorization and Phonotactic Constraints in Wav2Vec2.0. In (pp. 4593–4597). https://doi.org/10.21437/Interspeech.2024-2490

de Heer Kloots, M., Mohebbi, H., Pouw, C., Shen, G., Zuidema, W., Bentum, M. (2025) What do self-supervised speech models know about Dutch? Analyzing advantages of language-specific pre-training. Proc. Interspeech 2025, 256-260. https://doi.org/10.21437/Interspeech.2025-1526

Dieck, T. t., Pérez-Toro, P. A., Arias, T., Noeth, E., & Klumpp, P. (2022). Wav2vec behind the Scenes: How end2end Models learn Phonetics. In (pp. 5130–5134). https://doi.org/10.21437/Interspeech.2022-10865

Dunbar, E., Hamilakis, N., & Dupoux, E. (2022). Self-supervised language learning from raw audio: Lessons from the zero resource speech challenge. IEEE Journal of Selected Topics in Signal Processing, 16(6), 1211–1226.

Ersoy, A., Mousi, B.A., Chowdhury, S.A., Alam, F., Dalvi, F.I., Durrani, N. (2025) From Words to Waves: Analyzing Concept Formation in Speech and Text-Based Foundation Models. Proc. Interspeech 2025, 241-245. https://doi.org/10.21437/Interspeech.2025-2180

Fucci, D., Gaido, M., Negri, M., Bentivogli, L., Martins, A., & Attanasio, G. (2025). Different Speech Translation Models Encode and Translate Speaker Gender Differently. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 2: Short Papers), pages 1005–1019, Vienna, Austria. https://aclanthology.org/2025.acl-short.78/

Higy, B., Gelderloos, L., Alishahi, A., & Chrupała, G. (2021). Discrete representations in neural models of spoken language. In J. Bastings et al. (Eds.), Proceedings of the fourth blackboxnlp workshop on analyzing and interpreting neural networks for nlp (pp. 163–176). https://doi.org/10.18653/v1/2021.blackboxnlp-1.11

Huo, R., Dunbar, E. (2025) Iterative Refinement, Not Training Objective, Makes HuBERT Behave Differently from wav2vec 2.0. Proc. Interspeech 2025, 261-265. https://doi.org/10.21437/Interspeech.2025-514

Langedijk, A., Mohebbi, H., Sarti, G., Zuidema, W., & Jumelet, J. (2024). DecoderLens: Layerwise Interpretation of Encoder-Decoder Transformers. In K. Duh, H. Gomez, & S. Bethard (Eds.), Findings of the Association for Computational Linguistics: NAACL 2024 (pp. 4764–4780). Mexico City, Mexico: Association for Computational Linguistics. https://doi.org/10.18653/v1/2024.findings-naacl.296

Liu, O.D., Tang, H., Goldwater, S. (2023) Self-supervised Predictive Coding Models Encode Speaker and Phonetic Information in Orthogonal Subspaces. Proc. Interspeech 2023, 2968-2972. https://doi.org/10.21437/Interspeech.2023-871

Liu, O. D., Tang, H., Feldman, N. H., & Goldwater, S. (2024). A predictive learning model can simulate temporal dynamics and context effects found in neural representations of continuous speech. In Proceedings of the Annual Meeting of the Cognitive Science Society (Vol. 46). https://escholarship.org/uc/item/34b4v268

Ma, D., Ryant, N., & Liberman, M. (2021). Probing acoustic representations for phonetic properties. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (p. 311-315). https://doi.org/10.1109/ICASSP39728.2021.9414776

Millet, J., & Dunbar, E. (2022). Do self-supervised speech models develop human-like perception biases? In S. Muresan, P. Nakov, & A. Villavicencio (Eds.), Proceedings of the 60th Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers) (pp. 7591–7605). Dublin, Ireland: Association for Computational Linguistics. https://doi.org/10.18653/v1/2022.acl-long.523

Mohamed, M., Liu, O.D., Tang, H., Goldwater, S. (2024) Orthogonality and isotropy of speaker and phonetic information in self-supervised speech representations. Proc. Interspeech 2024, 3625-3629. https://doi.org/10.21437/Interspeech.2024-1054

Pasad, A., Chou, J.-C., & Livescu, K. (2021). Layer-wise analysis of a self-supervised speech representation model. IEEE Automatic Speech Recognition and Understanding workshop (ASRU) (p. 914-921). https://doi.org/10.1109/ASRU51503.2021.9688093

Schatz, T. (2016). ABX-Discriminability Measures and Applications (Theses, Université Paris 6 (UPMC)). https://hal.science/tel-01407461

Shen, G., Alishahi, A., Bisazza, A., & Chrupała, G. (2023). Wave to Syntax: Probing spoken language models for syntax. In Interspeech 2023 (pp. 1259–1263). https://doi.org/10.21437/Interspeech.2023-679

Sicherman, A., & Adi, Y. (2023). Analysing discrete self supervised speech representation for spoken language modeling. In IEEE International Conference on Acoustics, Speech and Signal processing (ICASSP) (pp. 1–5). https://doi.org/10.1109/ICASSP49357.2023.10097097

ten Bosch, L., Bentum, M., & Boves, L. (2023). Phonemic competition in end-to-end ASR models. In (pp. 586–590). https://doi.org/10.21437/Interspeech.2023-1846

Wells, D., Tang, H., & Richmond, K. (2022). Phonetic analysis of self-supervised representations of English speech. In Proc. Interspeech (pp. 3583–3587). https://doi.org/10.21437/Interspeech.2022-10884

These methods aim to quantify the relative importance of input segments for model representations (context mixing) or for model predictions (feature attribution).

Fucci, D., Gaido, M., Savoldi, B., Negri, M., Cettolo, M., & Bentivogli, L. (2024). SPES: Spectrogram Perturbation for Explainable Speech-to-Text Generation. https://doi.org/10.48550/arXiv.2411.01710

Fucci, D., Savoldi, B., Gaido, M., Negri, M., Cettolo, M., & Bentivogli, L. (2024). Explainability for Speech Models: On the Challenges of Acoustic Feature Selection. In F. Dell’Orletta, A. Lenci, S. Montemagni, & R. Sprugnoli (Eds.), Proceedings of the 10th Italian Conference on Computational Linguistics (CLiC-it 2024) (pp. 373–381). https://aclanthology.org/2024.clicit-1.45/

Fucci, D., Gaido, M., Negri, M., Cettolo, M., Bentivogli, L. (2025) Echoes of Phonetics: Unveiling Relevant Acoustic Cues for ASR via Feature Attribution. Proc. Interspeech 2025, 206-210. https://doi.org/10.21437/Interspeech.2025-918

Gupta, S., Ravanelli, M., Germain, P., & Subakan, C. (2024). Phoneme Discretized Saliency Maps for Explainable Detection of AI-Generated Voice. In (pp. 3295–3299). https://doi.org/10.21437/Interspeech.2024-632

Mancini, E., Paissan, F., Torroni, P., Ravanelli, M., & Subakan, C. (2024). Investigating the Effectiveness of Explainability Methods in Parkinson’s Detection from Speech. https://arxiv.org/abs/2411.08013

Meng, Y., Goldwater, S., Tang, H. (2025) Effective Context in Neural Speech Models. Proc. Interspeech 2025, 246-250, https://doi.org/10.21437/Interspeech.2025-1932

Mohebbi, H., Chrupała, G., Zuidema, W., & Alishahi, A. (2023). Homophone Disambiguation Reveals Patterns of Context Mixing in Speech Transformers. In H. Bouamor, J. Pino, & K. Bali (Eds.), Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing (pp. 8249–8260). Singapore: Association for Computational Linguistics. https://doi.org/10.18653/v1/2023.emnlp-main.513

Muckenhirn, H., Abrol, V., Magimai-Doss, M., & Marcel, S. (2019). Understanding and visualizing raw waveform-based CNNs. In Interspeech 2019 (pp. 2345–2349). https://doi.org/10.21437/Interspeech.2019-2341

Parekh, J., Parekh, S., Mozharovskyi, P., d’Alché-Buc, F., & Richard, G. (2022). Listen to Interpret: Post-hoc Interpretability for Audio Networks with NMF. https://doi.org/10.48550/arXiv.2202.11479

Pastor, E., Koudounas, A., Attanasio, G., Hovy, D., & Baralis, E. (2023). Explaining speech classification models via word-level audio segments and paralinguistic features. https://doi.org/10.48550/arXiv.2309.07733

Peng, P., & Harwath, D. (2022). Word Discovery in Visually Grounded, Self-Supervised Speech Models. In Interspeech 2023 (pp. 2823–2827). https://doi.org/10.21437/Interspeech.2022-10652

Peng, P., Li, S.-W., Räsänen, O., Mohamed, A., & Harwath, D. (2023). Syllable Discovery and Cross-Lingual Generalization in a Visually Grounded, Self-Supervised Speech Model. In Interspeech 2023 (pp. 391–395). https://doi.org/10.21437/Interspeech.2023-2044

Prasad, A., & Jyothi, P. (2020). How Accents Confound: Probing for Accent Information in End-to-End Speech Recognition Systems. In D. Jurafsky, J. Chai, N. Schluter, & J. Tetreault (Eds.), Proceedings of the 58th Annual Meeting of the Association for Computational Linguistics (pp. 3739–3753). Online: Association for Computational Linguistics. https://doi.org/10.18653/v1/2020.acl-main.345

Schnell, B., & Garner, P. N. (2021). Improving Emotional TTS with an Emotion Intensity Input from Unsupervised Extraction. In 11th ISCA Speech Synthesis Workshop (SSW 11) (pp. 60–65). ISCA. https://doi.org/10.21437/SSW.2021-11

Shams, E. A., Gessinger, I., & Carson-Berndsen, J. (2024). Uncovering Syllable Constituents in the Self-Attention-Based Speech Representations of Whisper. In Proceedings of the 7th BlackboxNLP Workshop: Analyzing and Interpreting Neural Networks for NLP (pp. 238-247). https://doi.org/10.18653/v1/2024.blackboxnlp-1.16

Shen, G., Mohebbi, H., Bisazza, A., Alishahi, A., Chrupala, G. (2025) On the reliability of feature attribution methods for speech classification. Proc. Interspeech 2025, 266-270. https://doi.org/10.21437/Interspeech.2025-1911

Shim, K., Choi, J., & Sung, W. (2022). Understanding the role of self attention for efficient speech recognition. In International Conference on Learning Representations. https://openreview.net/forum?id=AvcfxqRy4Y

Sivasankaran, S., Vincent, E., & Fohr, D. (2021). Explaining Deep Learning Models for Speech Enhancement. In Interspeech 2021 (pp. 696–700). https://doi.org/10.21437/Interspeech.2021-1764

Singhvi, D., Misra, D., Erkelens, A., Jain, R., Papadimitriou, I., & Saphra, N. (2025). Using Shapley interactions to understand how models use structure. In Proceedings of the 63rd Annual Meeting of the Association for Computational Linguistics (Volume 1: Long Papers), pages 20727–20737, Vienna, Austria. https://aclanthology.org/2025.acl-long.1011/

Wu, X., Bell, P., & Rajan, A. (2023). Explanations for Automatic Speech Recognition. https://doi.org/10.48550/arXiv.2302.14062

Wu, X., Bell, P., & Rajan, A. (2024). Can We Trust Explainable AI Methods on ASR? An Evaluation on Phoneme Recognition. In IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 10296–10300). https://doi.org/10.1109/ICASSP48485.2024.10445989

Yang, S.-W., Liu, A. T., & yi Lee, H. (2020). Understanding Self-Attention of Self-Supervised Audio Transformers. In Interspeech 2020. https://doi.org/10.21437/Interspeech.2020-2231

These methods aim to identify features and (networks of) components which causally affect model output behaviour.

Futami, H., Arora, S., Kashiwagi, Y., Tsunoo, E. Watanabe, S. (2024). Finding Task-specific Subnetworks in Multi-task Spoken Language Understanding Model. In Interspeech 2024(802–806). https://doi.org/10.21437/Interspeech.2024-712

Krishnan, A., Abdullah, B.M. Klakow, D. (2024). On the Encoding of Gender in Transformer-based ASR Representations. In Interspeech 2024 (3090–3094). https://doi.org/10.21437/Interspeech.2024-2209

Lai, C.I.J., Cooper, E., Zhang, Y., Chang, S., Qian, K., Liao, Y.L., Glass, J. (2022). On the Interplay between Sparsity, Naturalness, Intelligibility, and Prosody in Speech Synthesis On the interplay between sparsity, naturalness, intelligibility, and prosody in speech synthesis. IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP). https://doi.org/10.1109/ICASSP43922.2022.9747728

Lin, T.Q., Lin, G.T., Lee, H., Tang, H. (2024). Property Neurons in Self-Supervised Speech Transformers. SLT 2024. https://doi.org/10.48550/arXiv.2409.05910

Pouw, C., de Heer Kloots, M., Alishahi, A. Zuidema, W. (2024). Perception of Phonological Assimilation by Neural Speech Recognition Models Perception of Phonological Assimilation by Neural Speech Recognition Models. Computational Linguistics. https://doi.org/10.1162/coli_a_00526