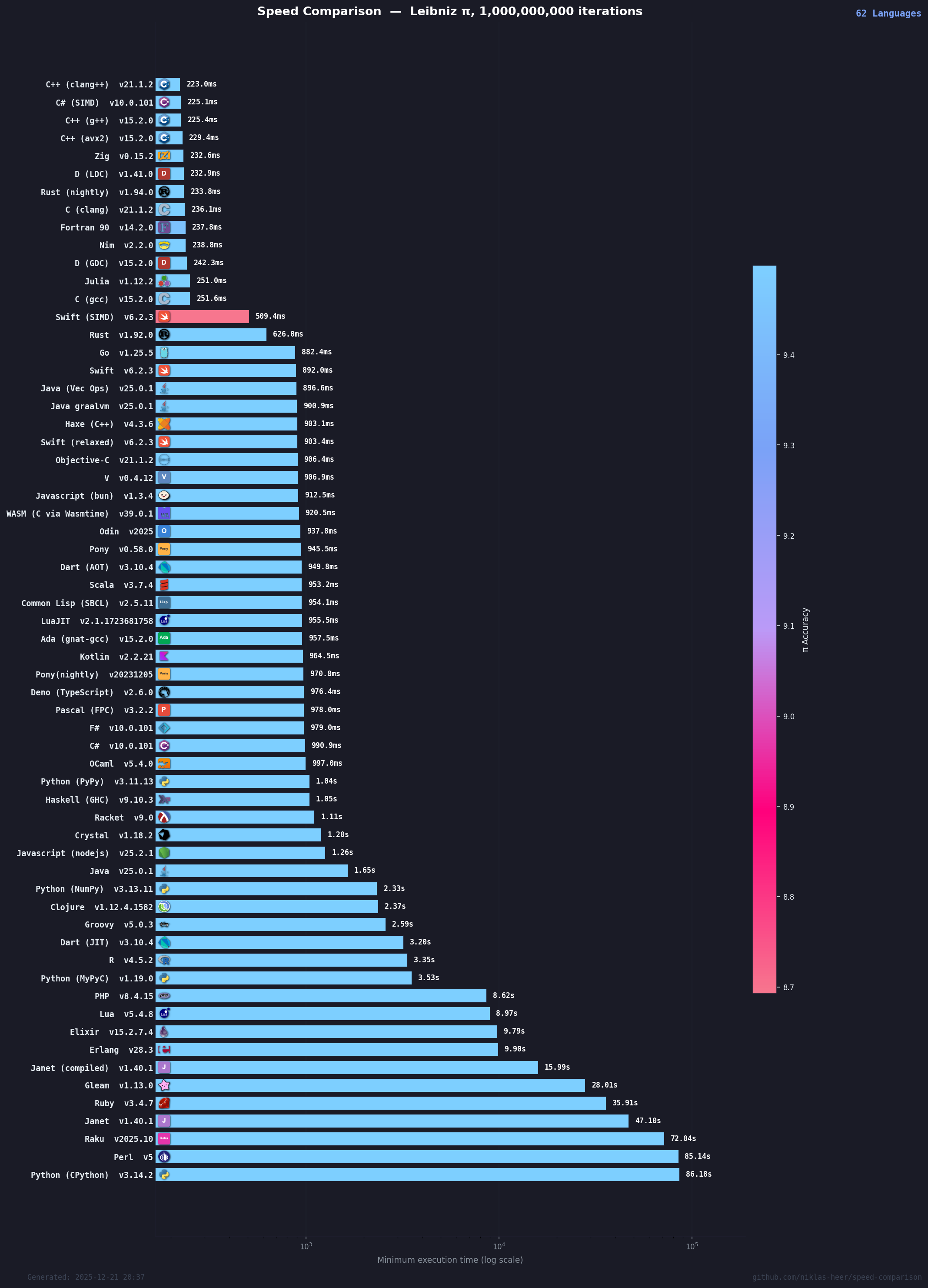

This project compares the speed of different programming languages.

In this project we don't really care about getting a precise calculation of π. We only want to see how fast each programming language runs.

It uses an implementation of the Leibniz formula for π to do the comparison.

Here is a video which explains how it works: Calculating π by hand
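For reference, the Leibniz formula is π/4 = 1 − 1/3 + 1/5 − 1/7 + … The snippet below is a minimal Python sketch of that series, not the code from this repository; the function name `leibniz_pi` and the `rounds` parameter are made up for illustration.

```python
import math


def leibniz_pi(rounds: int) -> float:
    """Approximate pi using the first `rounds` terms of the Leibniz series."""
    # Term k is (-1)^k / (2k + 1); the series converges to pi / 4.
    quarter_pi = sum((-1.0) ** k / (2 * k + 1) for k in range(rounds))
    return 4.0 * quarter_pi


if __name__ == "__main__":
    approx = leibniz_pi(1_000_000)
    print(f"approximation: {approx}")
    print(f"error:         {abs(approx - math.pi)}")
```

The series converges slowly (the error shrinks roughly like 1/rounds), which is part of why it makes a convenient loop-heavy workload.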

You can find the results here: https://niklas-heer.github.io/speed-comparison/

I'm no expert in all these languages, so take my results with a grain of salt.

This is a microbenchmark. It can certainly give you some clue about a language, but it doesn't tell you the whole picture.

Also, the findings only show how good a language is (or can be) at loops and floating-point math, which is just a small part of what a programming language does.

You are also welcome to contribute and help me fix my possibly horrible code in some languages. 😄

The benchmark measures single-threaded computational performance. To keep comparisons fair:

- No concurrency/parallelism: Implementations must be single-threaded. No multi-threading, async, or parallel processing.
- SIMD is allowed but separate: SIMD optimizations (using wider registers) are permitted but should be separate targets (e.g., `swift-simd`, `cpp-avx2`) rather than replacing the standard implementation.
- Standard language features: Use idiomatic code for the language. Compiler optimization flags are fine.
- Same algorithm: All implementations must use the Leibniz formula as shown in the existing implementations.

Why no concurrency? Concurrency results depend heavily on core count (4-core vs 64-core gives vastly different results), making comparisons meaningless. SIMD stays single-threaded - it just processes more data per instruction.

The benchmarks run on Ubicloud standard-4 runners:

CPU: 4 vCPUs (2 physical cores) on AMD EPYC 9454P processors

RAM: 16 GB

Storage: NVMe SSDs

OS: Ubuntu 24.04

See Ubicloud Runner Types for more details.

Everything is run by a Docker container and a bash script which invokes the programs.

To measure the execution time, a Python package is used.
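To give a rough idea of what measuring a program from the outside looks like, here is a small sketch using only the Python standard library. It is not the harness this project uses; `time_command` is a hypothetical helper, and the timed command is just a stand-in for one of the benchmark programs.

```python
import statistics
import subprocess
import sys
import time


def time_command(cmd: list, runs: int = 3) -> list:
    """Run `cmd` several times and return the wall-clock duration of each run in seconds."""
    durations = []
    for _ in range(runs):
        start = time.perf_counter()
        # check=True raises if the program exits with a non-zero status.
        subprocess.run(cmd, check=True, stdout=subprocess.DEVNULL)
        durations.append(time.perf_counter() - start)
    return durations


if __name__ == "__main__":
    # Stand-in workload: a short Python one-liner instead of a real benchmark binary.
    samples = time_command([sys.executable, "-c", "print(sum(range(10**6)))"])
    print(f"median: {statistics.median(samples) * 1000:.1f} ms")
```

Timing the whole process like this includes startup, file reading, and printing, which matches what the benchmark counts.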

- Docker
- earthly

Earthly lets you run everything with a single command:

    earthly +all

This will run all tasks to collect all measurements and then run the analysis.

To collect data for all languages run:

    earthly +collect-data

To collect data for a single language run:

    earthly +rust # or any other language target

Language targets are auto-discovered from the Earthfile. You can list them with:

    ./scripts/discover-languages.sh

To generate the combined CSV and chart from all results:

    earthly +analysis

For quick testing, run only a subset of fast languages:

    earthly +fast-check # runs: c, go, rust, cpython

The project uses GitHub Actions with a parallel matrix build:

- Auto-discovery: Language targets are automatically detected from the Earthfile

- Parallel execution: All 43+ languages run simultaneously in separate jobs

- Isolation: Each language gets a fresh runner environment

- Results collection: All results are merged and analyzed together

- Auto-publish: Results are published to GitHub Pages

Repository maintainers can trigger benchmarks on PRs using comments:

    /bench rust go c # Run specific languages

- `enable-ci`: Trigger full benchmark suite on a PR
- `skip-ci`: Skip the fast-check on a PR

This project uses an AI-powered CI workflow to keep all programming languages up to date automatically.

- Weekly Check: A scheduled workflow runs every Monday at 6 AM UTC

- Version Detection: Checks for new versions via:
  - Docker Hub Registry API (for official language images)
  - GitHub Releases API (for languages like Zig, Nim, Gleam)
  - Alpine package index (for Alpine-based packages)

- Automated Updates: Claude Code (via OpenRouter) updates the Earthfile with new versions

- Validation: Runs a quick benchmark to verify the update compiles and runs correctly

- Breaking Changes: If the build fails, Claude Code (Opus) researches and fixes breaking changes (up to 3 attempts)

- PR Creation: Creates a PR for review if successful, or an issue describing the failure if not

You can manually trigger a version check:

- Go to Actions → Version Check → Run workflow

- Optionally specify a single language name to check only that one

- Enable "Dry run" to check versions without creating PRs

Version sources are defined in scripts/version-sources.json. Each language maps to:

- `source`: Where to check for updates (docker, github, alpine, apt)
- `image` or `repo`: The Docker image or GitHub repository
- `earthfile_pattern`: Regex to extract current version from Earthfile
- `source_file`: The source code file for this language
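As an illustration of how such an entry could be used, the sketch below applies an `earthfile_pattern` regex to a snippet of Earthfile text. Everything concrete here is hypothetical: the image name, the path, the Earthfile excerpt, and the assumption that the pattern captures the version in its first group. The real values live in scripts/version-sources.json.

```python
import re
from typing import Optional

# Hypothetical entry, shaped like the fields described above.
entry = {
    "source": "docker",
    "image": "example/lang",  # hypothetical image name
    "earthfile_pattern": r"FROM example/lang:(\d+\.\d+\.\d+)",
    "source_file": "src/example.ext",  # hypothetical path
}

# Hypothetical Earthfile excerpt.
earthfile_text = """
example:
    FROM example/lang:1.2.3
    COPY ./src/example.ext ./
"""


def current_version(entry: dict, text: str) -> Optional[str]:
    """Return the version captured by the entry's pattern, or None if it does not match."""
    match = re.search(entry["earthfile_pattern"], text)
    return match.group(1) if match else None


if __name__ == "__main__":
    print(current_version(entry, earthfile_text))
```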

Why do you also count reading a file and printing the output?

Because I think this is a more realistic scenario to compare speeds.

Are the compile times included in the measurements?

No, they are not included, because in the real world a program is also compiled before it is run.

Isn't this just measuring startup time for fast languages?

No. The benchmark runs 1 billion iterations. Timing individual segments inside the Zig program gives:

- Startup + file read: ~0.01ms

- Computation: ~200ms

- Overhead: ~0.01%

Even at 1 million iterations, startup would only be ~4% overhead. At 1 billion, it's essentially zero.

Why is C++ (AVX2) slower than regular C++?

The standard C++ uses i & 0x1 which lets the compiler auto-vectorize. With -O3 -ffast-math -march=native, modern compilers do this extremely well. The explicit AVX2 version has overhead from manual vector setup and horizontal sum operations. Often compiler auto-vectorization beats hand-written SIMD for simple loops.

Why are Crystal/Odin/Ada so slow?

All three use the x = -x pattern which creates a loop-carried dependency that blocks auto-vectorization. The fast implementations use the branchless i & 0x1 trick instead, which allows the compiler to vectorize the loop.
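The two patterns can be sketched side by side. This is Python, so nothing here gets vectorized; the point is only to show where the dependency sits. The function names are made up, and deriving the sign as `1.0 - 2.0 * (i & 0x1)` is one possible way to use the parity bit, not necessarily what each implementation in this repository does.

```python
def leibniz_sign_flip(rounds: int) -> float:
    """Sign is carried from one iteration to the next (the x = -x pattern)."""
    total = 0.0
    x = 1.0
    for i in range(rounds):
        total += x / (2 * i + 1)
        x = -x  # the next iteration needs this value: a loop-carried dependency
    return 4.0 * total


def leibniz_parity(rounds: int) -> float:
    """Sign is computed from the loop index alone (the i & 0x1 pattern)."""
    total = 0.0
    for i in range(rounds):
        sign = 1.0 - 2.0 * (i & 0x1)  # +1.0 for even i, -1.0 for odd i
        total += sign / (2 * i + 1)
    return 4.0 * total


if __name__ == "__main__":
    print(leibniz_sign_flip(1_000_000))
    print(leibniz_parity(1_000_000))
```

Both functions add the same terms in the same order, so they return the same value. The difference is that in the second version each term depends only on `i`, which is what gives a compiler room to process several iterations at once.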

Does Zig use fast-math?

Yes. Zig uses @setFloatMode(.optimized) which is equivalent to -ffast-math. This is documented in the source code.

Does Julia use fast-math and SIMD?

Yes. Julia annotates the loop with `@fastmath @simd for`, using both annotations together. `@simd` gives the compiler vectorization hints (similar to compiler auto-vectorization), while `@fastmath` relaxes floating-point strictness.

Why is Nim faster than C?

Both compile to native code via gcc with similar flags. The marginal difference is likely measurement variance. Nim's code explicitly uses cuint to match C's unsigned int type for the loop counter.

Some implementations aren't optimized / weren't written by experts

Fair point. I'm not an expert in all 40+ languages. The goal was idiomatic-ish code, but some implementations could definitely be improved. That's why PRs are always welcome! For example, Swift has 3 variants (standard, relaxed, SIMD) showing different optimization levels.

This benchmark shows what performance you can expect when someone not deeply versed in a language writes the code - which is actually a useful data point.

Which languages use -ffast-math or equivalent?

| Language | Fast-math | Notes |
|---|---|---|
| C/C++ (gcc/clang) | -ffast-math | Full optimizations |
| D (GDC/LDC) | -ffast-math | Full optimizations |
| Zig | @setFloatMode(.optimized) | Equivalent to fast-math |
| Julia | @fastmath | Plus @simd hint |
| Fortran | No | Uses manual loop unrolling instead |
| Rust | No | But uses vectorizable pattern |
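One reason fast-math matters for this benchmark: floating-point addition is not associative, so a compiler that must follow strict IEEE semantics is generally not free to regroup a sum. Fast-math style options lift that restriction, at the cost of slightly different results. A tiny Python illustration of the regrouping effect (the variable names are just for this example):

```python
# Three ordinary doubles, added in two different groupings.
a, b, c = 0.1, 0.2, 0.3

left_to_right = (a + b) + c  # the order a strict compiler has to keep
regrouped = a + (b + c)      # an order a fast-math compiler may choose

if __name__ == "__main__":
    print(left_to_right)
    print(regrouped)
    print(left_to_right == regrouped)
```

The two results differ only in the last bits, which is fine here since the benchmark is about speed rather than a precise value of π.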

See all contributors on the Contributors page.

For creating hyperfine which is used for the fundamental benchmarking.

This project takes inspiration from Thomas who did a similar comparison on his blog.